知乎给我推荐了优化算法 | Gaining Sharing Knowledge based Algorithm(附MATLAB代码),xxx优化算法实在太多了。这些优化算法的名字花里胡哨,配上一个听起来很合理的解释,包装一下,就是一个新的算法。那这些算法到底行不行呢,是骡子还是马,得拉出来溜溜。

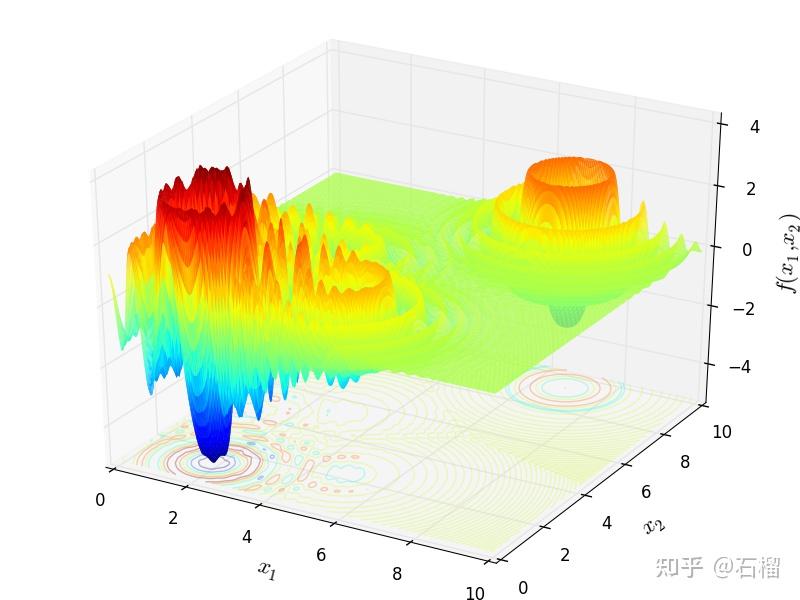

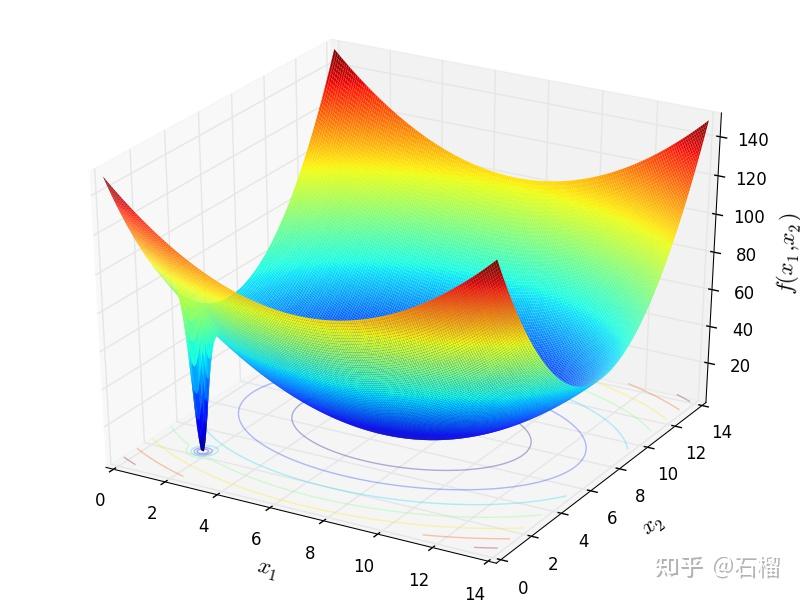

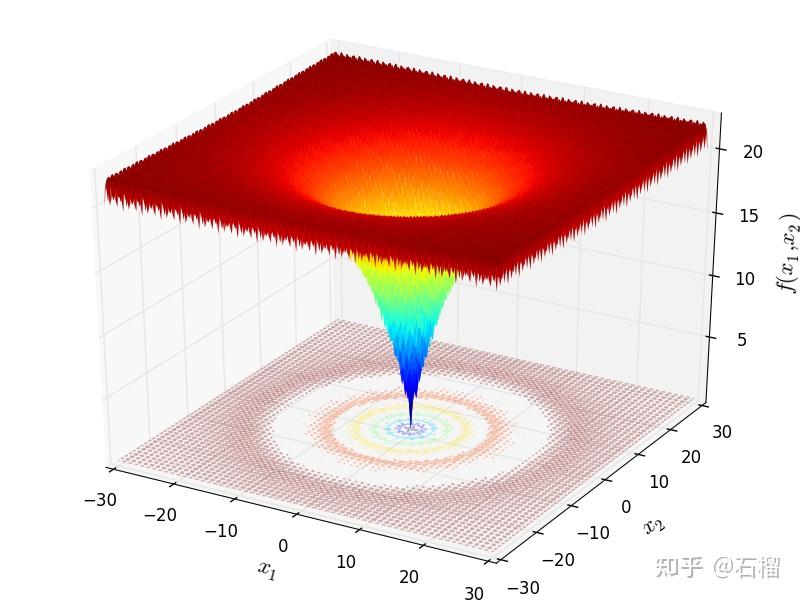

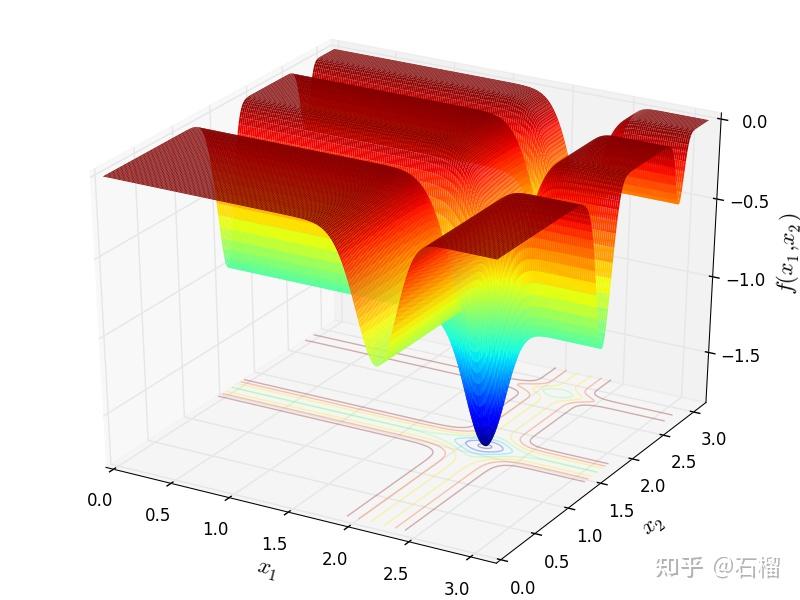

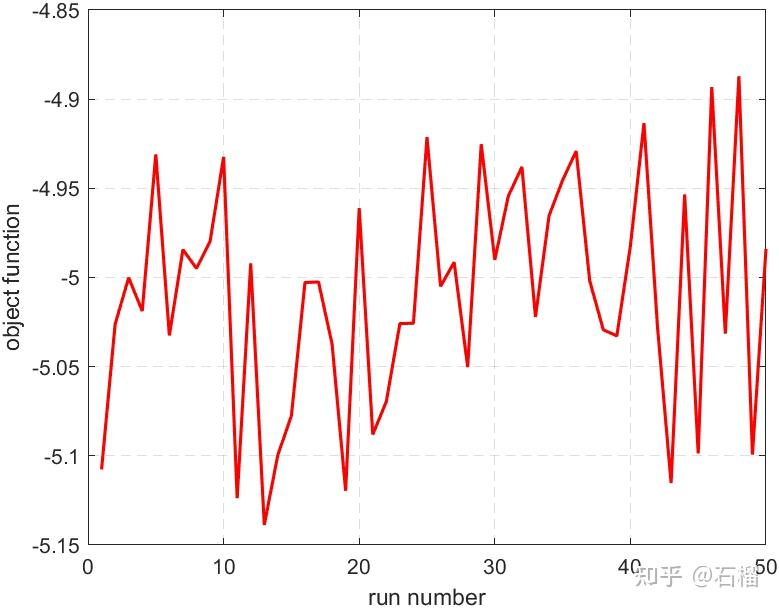

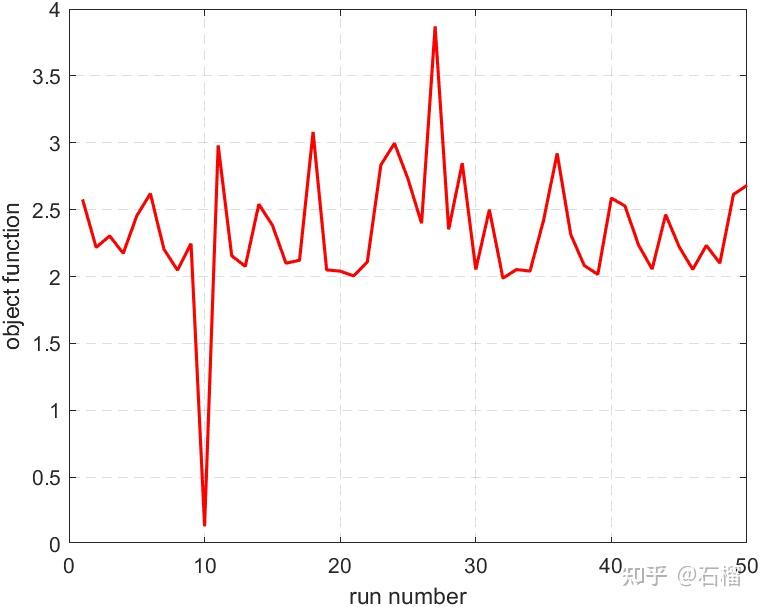

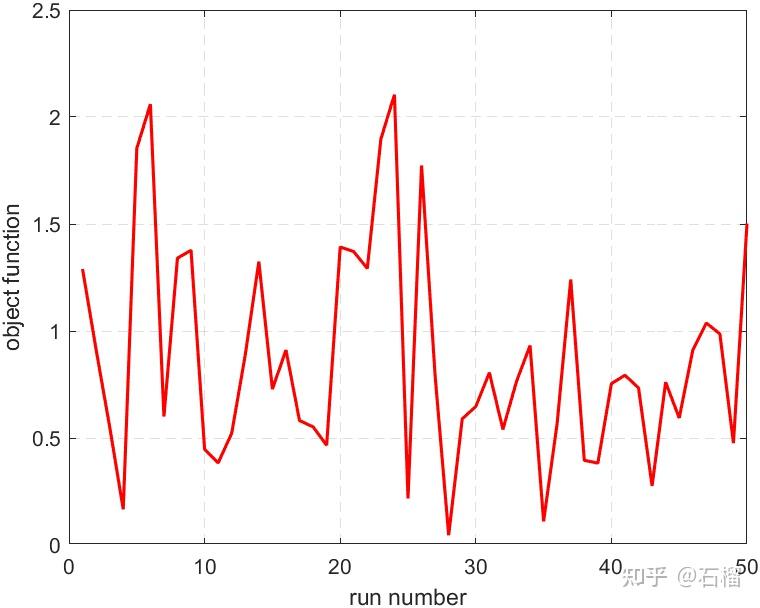

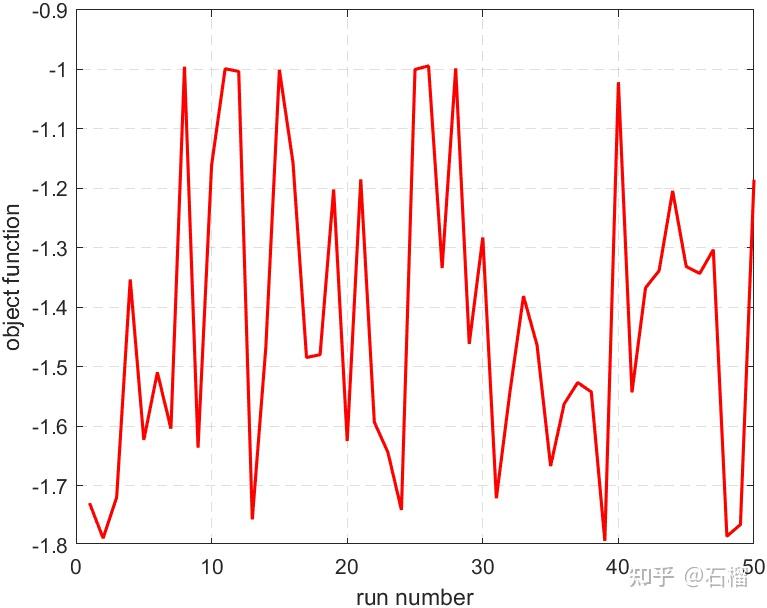

选择4个比较经典的优化测试函数:Langermann,Damavandi,Ackley,Michalewicz 作为需要被优化的目标函数。

Langermann函数定义域有一个局部极小值 ,同时还有很多正负切换的沟壑,这些也是局部极小值。Damavandi函数定义域内有一个局部极小值

,比较具有欺骗性,一旦陷入这个局部极小值,就很难跳出来了。Ackley函数图像上有不计其数的局部极小值,Michalewicz函数图像上有纵横交错的区域,这些都是陷阱。性能良好的智能优化算法,得跳出这些陷阱,找到全局最小值。

一般的,智能优化算法用于离线优化居多,所以这里的性能测试仅针对优化性能:算法能不能找到全局最小值。实时性在离线优化时并不是很重要,而且还和算法的迭代次数有关,而如何定义不同的智能优化算法一次迭代也没有一个统一的标准,所以本文的测试不涉及到智能优化算法的实时性。

以同样的的参数,分别优化上面给到的目标函数多次(50次),统计多次优化之后的目标函数最小值,并进行比较,下面给出性能测试代码:

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%% Gaining-Sharing Knowledge Based Algorithm for Solving Optimization

%% Problems: A Novel Nature-Inspired Algorithm

%% Authors: Ali Wagdy Mohamed, Anas A. Hadi , Ali Khater Mohamed

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

close all;

clear;

clc;

format long;

Alg_Name='GSK';

n_problems=1;

ConvDisp=1;

Run_No=50;%重复求解多少次

problem_size = 2;

max_nfes = 300 * 100;

rand('seed', sum(100 * clock));

val_2_reach = 10^(-8);

%% Target function

flag = 1;

if flag == 1

xmin = [0,0];

xmax = [10,10];

fun = @langermann;

elseif flag ==2

xmin = [0,0];

xmax = [14,14];

fun = @damavandi;

elseif flag == 3

xmin = [-2,-2];

xmax = [2,2];

fun = @ackley;

else

xmin = [0,0];

xmax = [pi,pi];

fun = @michal;

end

max_region = xmin(1);

min_region = xmax(2);

lu = [min_region * ones(1, problem_size); max_region * ones(1, problem_size)];

analysis= zeros(n_problems,6);

%% Core process

optimum = flag * 100.0;

%% Record the best results

outcome = [];

fprintf('\

-------------------------------------------------------\

')

fprintf('Function=%d, Dimension size=%d\

', flag, problem_size)

dim1=[];

dim2=[];

bsf_fit_var_record = cell(Run_No,1);

bsf_solution_record = cell(Run_No,1);

for run_id = 1 : Run_No

bsf_error_val=[];

run_funcvals = [];

pop_size = 100;

G_Max=fix(max_nfes/pop_size);

%% Initialize the main population

popold = repmat(lu(1, :), pop_size, 1) + rand(pop_size, problem_size) .* (repmat(lu(2, :) - lu(1, :), pop_size, 1));

pop = popold; % the old population becomes the current population

fitness = fun(mat2cell(pop, ones(size(pop, 1), 1), problem_size));

nfes = 0;

bsf_fit_var = 1e+300;

%%%%%%%%%%%%%%%%%%%%%%%% for out

for i = 1 : pop_size

nfes = nfes + 1;

%% if nfes > max_nfes; exit(1); end

if nfes > max_nfes; break; end

if fitness(i) < bsf_fit_var

bsf_fit_var = fitness(i);

bsf_solution = pop(i, :);

end

run_funcvals = [run_funcvals;bsf_fit_var];

end

%%%%%%%%%%%%%%%%%%%%%%%% Parameter settings%%%%%%%%%%

KF=0.3;% Knowledge Factor

KR=0.6;% Knowledge Ratio

K=10*ones(pop_size,1);%Knowledge Rate

g=0;

%% main loop

while nfes <= max_nfes

g=g+1;

D_Gained_Shared_Junior=ceil((problem_size)*(1-g/G_Max).^K);

D_Gained_Shared_Senior=problem_size-D_Gained_Shared_Junior;

pop = popold; % the old population becomes the current population

[valBest, indBest] = sort(fitness, 'ascend');

[Rg1, Rg2, Rg3] = Gained_Shared_Junior_R1R2R3(indBest);

[R1, R2, R3] = Gained_Shared_Senior_R1R2R3(indBest);

R01=1:pop_size;

Gained_Shared_Junior=zeros(pop_size, problem_size);

ind1=fitness(R01)>fitness(Rg3);

if(sum(ind1)>0)

Gained_Shared_Junior (ind1,:)= pop(ind1,:) + KF*ones(sum(ind1), problem_size) .* (pop(Rg1(ind1),:) - pop(Rg2(ind1),:)+pop(Rg3(ind1), :)-pop(ind1,:)) ;

end

ind1=~ind1;

if(sum(ind1)>0)

Gained_Shared_Junior(ind1,:) = pop(ind1,:) + KF*ones(sum(ind1), problem_size) .* (pop(Rg1(ind1),:) - pop(Rg2(ind1),:)+pop(ind1,:)-pop(Rg3(ind1), :)) ;

end

R0=1:pop_size;

Gained_Shared_Senior=zeros(pop_size, problem_size);

ind=fitness(R0)>fitness(R2);

if(sum(ind)>0)

Gained_Shared_Senior(ind,:) = pop(ind,:) + KF*ones(sum(ind), problem_size) .* (pop(R1(ind),:) - pop(ind,:) + pop(R2(ind),:) - pop(R3(ind), :)) ;

end

ind=~ind;

if(sum(ind)>0)

Gained_Shared_Senior(ind,:) = pop(ind,:) + KF*ones(sum(ind), problem_size) .* (pop(R1(ind),:) - pop(R2(ind),:) + pop(ind,:) - pop(R3(ind), :)) ;

end

Gained_Shared_Junior = boundConstraint(Gained_Shared_Junior, pop, lu);

Gained_Shared_Senior = boundConstraint(Gained_Shared_Senior, pop, lu);

D_Gained_Shared_Junior_mask=rand(pop_size, problem_size)<=(D_Gained_Shared_Junior(:, ones(1, problem_size))https://zhuanlan.zhihu.com/p/problem_size);

D_Gained_Shared_Senior_mask=~D_Gained_Shared_Junior_mask;

D_Gained_Shared_Junior_rand_mask=rand(pop_size, problem_size)<=KR*ones(pop_size, problem_size);

D_Gained_Shared_Junior_mask=and(D_Gained_Shared_Junior_mask,D_Gained_Shared_Junior_rand_mask);

D_Gained_Shared_Senior_rand_mask=rand(pop_size, problem_size)<=KR*ones(pop_size, problem_size);

D_Gained_Shared_Senior_mask=and(D_Gained_Shared_Senior_mask,D_Gained_Shared_Senior_rand_mask);

ui=pop;

ui(D_Gained_Shared_Junior_mask) = Gained_Shared_Junior(D_Gained_Shared_Junior_mask);

ui(D_Gained_Shared_Senior_mask) = Gained_Shared_Senior(D_Gained_Shared_Senior_mask);

children_fitness = fun(mat2cell(ui, ones(size(ui, 1), 1), problem_size));

for i = 1 : pop_size

nfes = nfes + 1;

if nfes > max_nfes; break; end

if children_fitness(i) < bsf_fit_var

bsf_fit_var = children_fitness(i);

bsf_solution = ui(i, :);

end

run_funcvals = [run_funcvals;bsf_fit_var];

bsf_fit_var_record{run_id} = [bsf_fit_var_record{run_id}; bsf_fit_var];

bsf_solution_record{run_id} = [bsf_solution_record{run_id}; bsf_solution];

end

[fitness, Child_is_better_index] = min([fitness, children_fitness], [], 2);

popold = pop;

popold(Child_is_better_index == 2, :) = ui(Child_is_better_index == 2, :);

fprintf('NFES: %d, bsf_fit: %1.6e, D_Gained_Shared_Junior: %2.2e, D_Gained_Shared_Senior: %2.2e\

', nfes/100,bsf_fit_var,problem_size*sum(sum(D_Gained_Shared_Junior))/(pop_size*problem_size),problem_size*sum(sum(D_Gained_Shared_Senior))/(pop_size*problem_size))

% bsf_fit_var_record{run_id}=[bsf_fit_var_record{run_id}; bsf_fit_var];

% bsf_solution_record{run_id}=[bsf_solution_record{run_id}; bsf_solution];

end % end while loop

bsf_error_val = bsf_fit_var - optimum;

if bsf_error_val < val_2_reach

bsf_error_val = 0;

end

% fprintf('%d th run, best-so-far error value=%1.8e\

', run_id , bsf_error_val)

outcome = [outcome bsf_error_val];

%% plot convergence figures

if (ConvDisp)

run_funcvals=run_funcvals-optimum;

run_funcvals=run_funcvals';

dim1(run_id,:)=1:length(run_funcvals);

dim2(run_id,:)=log10(run_funcvals);

end

%%%%%%%%%%%%%%%%%%%%%%%%%%%

end %% end 1 run

%% save ststiatical output in analysis file%%%%

func = 1;

analysis(func,1)=min(outcome);

analysis(func,2)=median(outcome);

analysis(func,3)=max(outcome);

analysis(func,4)=mean(outcome);

analysis(func,5)=std(outcome);

median_figure=find(outcome== median(outcome));

analysis(func,6)=median_figure(1);

%% print statistical output and save convergence figures%%%

% fprintf('%e\

',min(outcome));

% fprintf('%e\

',median(outcome));

% fprintf('%e\

',mean(outcome));

% fprintf('%e\

',max(outcome));

% fprintf('%e\

',std(outcome));

% dim11=dim1(median_figure,:);

% dim22=dim2(median_figure,:);

%% plot bsf_fit_var

bsf_fit_var_best_record = cellfun(@min, bsf_fit_var_record);

plot(bsf_fit_var_best_record, 'r', 'Linewidth', 1.5);

xlabel('run number');

ylabel('object function');

grid on;

set(gca,'GridLineStyle','--');

fprintf('Mean and Std of solution\

');

display([mean(bsf_fit_var_best_record), std(bsf_fit_var_best_record)]);

[~, run_id_best] = min(bsf_fit_var_best_record);

figure(2)

plot(bsf_fit_var_record{run_id_best}, 'r', 'Linewidth', 1.5);

xlabel('iteration');

ylabel('object function');

grid on;

set(gca,'GridLineStyle','--');

xlim([0, pop_size*10]);

title(['bsf\\_solution: ', num2str(bsf_fit_var_record{run_id_best}(end))]);

figure(3); hold on;

scatter(bsf_solution_record{run_id_best}(:, 1), bsf_solution_record{run_id_best}(:, 2), 'MarkerFaceColor', 'y', 'MarkerEdgeColor', 'k');

scatter(bsf_solution_record{run_id_best}(end, 1), bsf_solution_record{run_id_best}(end, 2), 'MarkerFaceColor', 'b', 'MarkerEdgeColor', 'k');

xlim([bsf_solution_record{run_id_best}(end, 1)-0.5, bsf_solution_record{run_id_best}(end, 1)+0.5]);

ylim([bsf_solution_record{run_id_best}(end, 2)-0.5, bsf_solution_record{run_id_best}(end, 2)+0.5]);

grid on;

set(gca,'GridLineStyle','--');

xlabel('$x_1$', 'interpreter', 'latex');

ylabel('$x_2$', 'interpreter', 'latex');

% print bsf_fit_var and bsf_solution

if exist('bsf_fit_var', 'var') && exist('bsf_solution', 'var')

fprintf('bsf_solution: %.4f\

', bsf_fit_var_record{run_id_best}(end));

fprintf('bsf_solution: x1=%.4f\

',bsf_solution_record{run_id_best}(end, 1));

fprintf('bsf_solution: x2=%.4f\

',bsf_solution_record{run_id_best}(end, 2));

end

function lang = langermann(x)

a = [3,5,2,1,7];

b = [5,2,1,4,9];

c = [1,2,5,2,3];

lang =zeros(size(x,1),1);

for j =1:size(x,1)

s = 0;

xj = x{j};

x1 = xj(1);

x2 = xj(2);

for i = 1:5

zi = (x1-a(i)).^2 + (x2 - b(i)).^2;

s = s - c(i)*cos(pi.*zi)https://zhuanlan.zhihu.com/p/exp(zihttps://zhuanlan.zhihu.com/p/pi);

end

lang(j) = s;

end

end

function dama = damavandi(x)

dama = zeros(size(x,1),1);

for j = 1:size(x,1)

xj = x{j};

x1 = xj(1);

x2 = xj(2);

dama(j)=(1-(abs((sin(pi*(x1-2))*sin(pi*(x2-2)))/(pi*pi*(x1-2)*(x2...

-2)))).^5)*(2+(x1-7).^2+2*(x2-7).^2);

end

end

function ackley = ackley(x)

ackley =zeros(size(x,1),1);

for j =1:size(x,1)

xj = x{j};

x1 = xj(1);

x2 = xj(2);

e = exp(1);

s = 20 + e -20.*exp(-0.2*sqrt((x1^2+x2^2)/2)) - exp(1/2*(cos(2*pi*x1)+cos(2*pi*x2)));

ackley(j) = s;

end

end

function mi = michal(x)

mi = zeros(size(x,1),1);

for j = 1:size(x,1)

xj = x{j};

x1 = xj(1);

x2 = xj(2);

s = sin(x1)*sin(x1^2/pi)^20 + sin(x2)*sin(2*x2^2/pi)^20;

mi(j) = -s;

end

end| 目标函数 | 全局最小值 | 局部极小值 | 优化均值 | 优化标准差 |

|---|---|---|---|---|

| Langerman | ?5.1621 | -3 | -5.00919 | 0.06424 |

| Damavandi | 0 | 2 | 2.33586 | 0.48733 |

| Ackley | 0 | - | 0.87281 | 0.50766 |

| Michalewicz | -1.8013 | - | -1.41839 | 0.25701 |

测试的算法为Gaining Sharing Knowledge based Algorithm,测试的4个目标函数中,只有对Langerman函数的优化接近其全局最小值,其余的三个函数,50次中最多只有3次优化结果比较接近全局最小值,全局最优解命中率比较低。从50次求解的结果来看,每次求解的结果不一致,波动比较大。

各种层出不穷的智能优化算法或多或少都有以下问题: